Definition

According to Wikipedia, Exploratory Data Analysis (EDA) is an approach to analyzing data sets to summarize their main characteristics, often using statistical graphics and other data visualization methods.

It helps us identify potential issues with our dataset (e.g. missing data and outliers), understand the nature and type of the variables, understand the relationships between them, and communicate our findings effectively. This supports the company's decision-making, because the advice is grounded in data.

Exploratory Data Analysis Process

The EDA process is similar across data science programming languages such as R and Python. It involves three major steps:

Data Input/Reading

This involves assigning your data to an object in your programming language so that it is held in memory. Each data source has its own method of input and handling. Examples in Python and R:

```python
import pandas as pd

# Read a CSV file into a pandas DataFrame
df = pd.read_csv('filepath/data.csv')
```

```r
# Read a CSV file into an R data frame
df <- read.csv('filepath/data.csv')
```

The method to use is dictated by the size of the data and the format in which it is stored.
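Because the reading method depends on data size, one option for a file too large to load at once is to read it in pieces. The following is only a minimal sketch, assuming pandas' `chunksize` parameter; the in-memory string stands in for a real file, and the column names `id` and `score` are made up for illustration.

```python
import io

import pandas as pd

# Hypothetical stand-in for a CSV file on disk; a real file path works the same way.
csv_text = "id,score\n1,10\n2,20\n3,30\n4,40\n5,50\n"

# Small file: load everything into memory at once.
df = pd.read_csv(io.StringIO(csv_text))

# Large file: read a few rows at a time and aggregate as you go,
# so the whole file never has to fit in memory.
total_rows = 0
score_sum = 0
for chunk in pd.read_csv(io.StringIO(csv_text), chunksize=2):
    total_rows += len(chunk)
    score_sum += chunk["score"].sum()

mean_score = score_sum / total_rows
print(df.shape, total_rows, mean_score)
```

Other formats have their own readers (for example `pd.read_excel` or `pd.read_json`), so the same pattern applies with a different function.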

Data Cleaning and Analysis

This involves getting a deeper understanding of the data you imported. It will involve:

Identifying missing data points

During data collection, some respondents skip questions, or pick an option without giving the required input. This produces missing data that could otherwise have given better insight. EDA helps identify which values are missing and how much data is missing. Missing values are most commonly represented as null or NaN.

Identifying outliers

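Outliers are data points that differ significantly from the other data points in the dataset. We tend to identify them through quantiles or percentiles. A common rule of thumb treats values outside the (0.1, 0.9) quantile range as outliers. Dropping them is a frequent choice, but it is not always the best one: an outlier can be a genuine observation, so check before removing it. Example in Python:

```python
# Get the lower and upper quantile borders
low, high = df['column'].quantile([0.1, 0.9])

# Boolean mask: True where the value lies within the borders (inclusive)
mask = df['column'].between(low, high)

# Keep only the rows inside the borders
df_filtered = df[mask]
```

Distribution of the data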

We examine the spread, shape, and central tendency of the data (mean, standard deviation, variance, etc.) to get insights into its distribution. This helps us decide which statistical test or analysis to use, and also helps in checking for skewness (data that is unbalanced toward one side).

Statistical Analysis

The method used for statistical analysis depends on the data distribution and data types. It involves computing correlations between variables, which aids in understanding concepts such as multicollinearity.
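As a rough illustration of letting the distribution guide the analysis, the sketch below summarizes each column, checks skewness, and then computes both Pearson and Spearman correlations. The data and column names (`hours`, `score`, `hours_x2`) are made up; `hours_x2` is deliberately a multiple of `hours` to show what a perfectly collinear pair looks like.

```python
import pandas as pd

# Made-up data for illustration only.
df = pd.DataFrame({
    "hours": [1, 2, 3, 4, 5, 6],
    "score": [52, 55, 61, 64, 70, 95],
    "hours_x2": [2, 4, 6, 8, 10, 12],  # exact multiple of "hours"
})

# Spread and central tendency: count, mean, std, min, quartiles, max.
summary = df.describe()

# Skewness per column: values far from 0 suggest an asymmetric distribution.
skewness = df.skew()

# Pearson measures linear association; Spearman is rank-based and is
# often preferred when the data is skewed or the relationship is not linear.
pearson = df.corr(method="pearson")
spearman = df.corr(method="spearman")

print(skewness)
print(pearson.round(2))
```

A correlation close to 1 or -1 between two independent variables, as with `hours` and `hours_x2` here, is a warning sign of multicollinearity.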

We also perform statistical tests on the data to help us answer the hypotheses or objectives we set out with.
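One possible test, shown here only as a sketch, is a two-sample t-test comparing the means of two groups. It assumes SciPy is available (the article does not name a testing library), uses Welch's variant, which does not assume equal variances, and runs on made-up measurements.

```python
from scipy import stats

# Made-up measurements for two groups (illustration only).
group_a = [5.1, 4.9, 5.6, 5.8, 6.0]
group_b = [6.9, 7.2, 6.8, 7.5, 7.1]

# Welch's t-test: the null hypothesis is that both groups share the same mean.
result = stats.ttest_ind(group_a, group_b, equal_var=False)

alpha = 0.05  # significance level chosen for this example
reject_null = result.pvalue < alpha
print(result.statistic, result.pvalue, reject_null)
```

Which test is appropriate depends on the data type and distribution found earlier, so treat the t-test as one example rather than a default.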

We can also perform regression analysis on the data set to get more insight into the relationship between our response variable and the independent variables.
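A simple linear regression with one independent variable can be sketched with NumPy's `polyfit`. This is a minimal example on made-up numbers, not a full modelling workflow; the variable names are hypothetical.

```python
import numpy as np

# Made-up data: x is the independent variable, y is the response.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([3.1, 4.9, 7.2, 8.8, 11.0])

# Fit y = slope * x + intercept by ordinary least squares.
slope, intercept = np.polyfit(x, y, deg=1)

# R-squared: share of the variance in y explained by the fitted line.
predicted = slope * x + intercept
ss_res = np.sum((y - predicted) ** 2)
ss_tot = np.sum((y - y.mean()) ** 2)
r_squared = 1 - ss_res / ss_tot

print(slope, intercept, r_squared)
```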

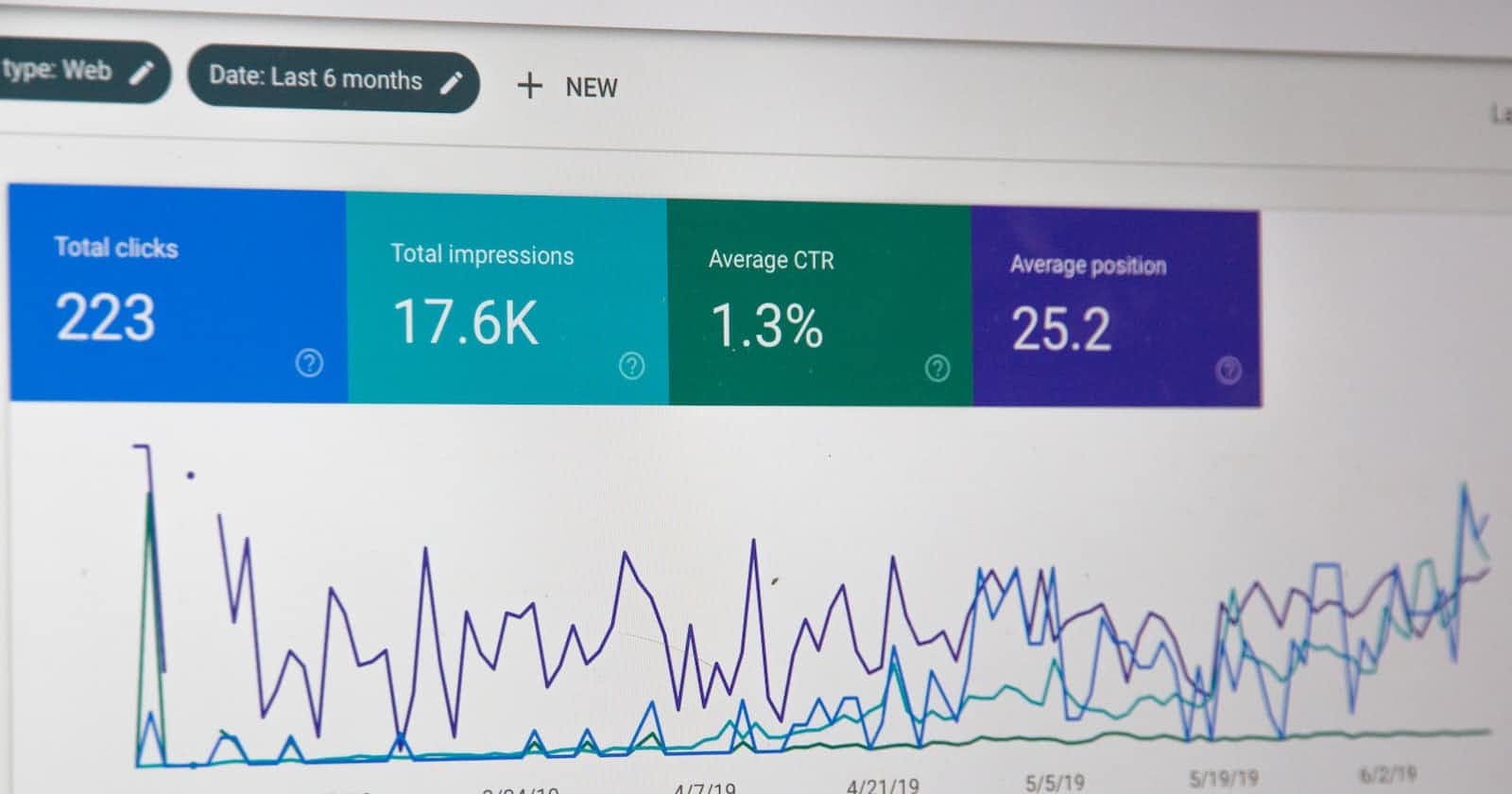

Data Visualization

This is considered the last step of Exploratory Data Analysis.

We visualize our data to identify patterns and trends (for example in time series) that would be difficult to spot in the raw data.

It also involves communicating our results in simple language that policymakers can understand. We compile our results in visual form to highlight the major insights and present data-driven recommendations.

Commonly used data visualization packages include ggplot2 (R), Matplotlib (Python), seaborn (Python), and Plotly (R and Python).
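As a small sketch using Matplotlib, the example below draws a histogram (to see the distribution) next to a line plot (to see the trend over time). The monthly `sales` figures and the output file name `eda_overview.png` are made up for illustration.

```python
import matplotlib

matplotlib.use("Agg")  # non-interactive backend, so no display is needed

import matplotlib.pyplot as plt
import pandas as pd

# Made-up monthly data for illustration only.
df = pd.DataFrame({
    "month": pd.date_range("2023-01-01", periods=6, freq="MS"),
    "sales": [120, 135, 128, 150, 170, 165],
})

fig, (ax_hist, ax_line) = plt.subplots(1, 2, figsize=(10, 4))

# Histogram: shows how the values are distributed.
ax_hist.hist(df["sales"], bins=5)
ax_hist.set_title("Distribution of sales")
ax_hist.set_xlabel("sales")

# Line plot: shows the trend over time.
ax_line.plot(df["month"], df["sales"], marker="o")
ax_line.set_title("Sales over time")
ax_line.set_xlabel("month")

fig.autofmt_xdate()  # tilt the date labels so they do not overlap
fig.tight_layout()
fig.savefig("eda_overview.png")  # hypothetical output file name
```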

Importance

The significance of EDA falls into three main areas:

Data Quality and Better Understanding

EDA gives you a better understanding of the data you are working with by revealing trends, patterns, and outliers (which are considered anomalies). This aids in planning how to analyze and interpret the data.

Identifying missing values helps ensure that we use reliable data.
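Counting missing values per column is a quick first check on reliability. The snippet below is a minimal sketch, assuming a pandas DataFrame; the columns and values are made up for illustration.

```python
import numpy as np
import pandas as pd

# Made-up survey data with some skipped answers.
df = pd.DataFrame({
    "age": [25, np.nan, 31, 40],
    "city": ["Nairobi", "Kisumu", None, "Mombasa"],
    "income": [500.0, 620.0, np.nan, np.nan],
})

# Number of missing values in each column.
missing_count = df.isna().sum()

# Share of missing values in each column (0.0 to 1.0).
missing_share = df.isna().mean()

print(missing_count)
print(missing_share)
```

Columns with a high share of missing values may need imputation, or may be too unreliable to use at all.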

Communication

The use of visualization and summaries aids in presenting our results. This makes the results more understandable to most people.

Decision Making

EDA can aid in making decisions backed up by data. This gives policymakers a chance to make informed decisions that tend to be more effective and achievable.

Conclusion

EDA is a critical step in data processing for gaining insights about data sets. These insights aid in making data-driven decisions, which tend to be more effective.

It's essential for a data analyst to master EDA in order to help policymakers understand past and current events and identify best practices for the future.

I hope this ultimate guide serves as a valuable resource for anyone looking to improve their EDA skills.

You can check a sample EDA procedure in this GitHub repository and never feel shy about asking for guidance.